Designing Compound Objects Through 3D Printing and Augmented Reality

Smart manufacturing, also known as Industry 4.0, involves automated yet collaborative processes – humans and machines working together. In a new study entitled “Design and Interaction Interface using Augmented Reality for Smart Manufacturing,” a pair of researchers used augmented reality to develop a design and interaction interface for smart manufacturing.

In Distributed Numerical Control (DNC) systems, each machine tool, such as a mill or 3D printer, is connected to a machine control unit (MCU), like a computer. The MCU controls the machine tool by sending a part program, which is a set of instructions to be carried out by the machine.

“To make our factory smart, one way is to have the machine operator perform the part-programming task at the machine tool, which is called manual data input (MDI),” the researchers explain. “A minimum of training in part programming is required of the machine operator. However, the current development requires the operator to enter the part geometry data and motion commands manually into the MCU, and thus MDI can only handle simple operations and parts. There is a critical need to have appropriate tool of human-machine interaction (HMI) to support interaction and customization in SmartMFG environment.”

The question the researchers ask is: “What should the HMI system look like such that individuals can access and interact within smart manufacturing to design and manufacture custom products?” They then hypothesize that “AR-based design interfaces that communicate with MCU directly will increase the degree of interaction and the complexity of instructions performed in MDI systems.”

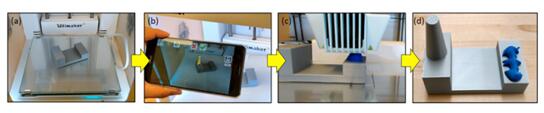

To test the hypothesis, the researchers developed a prototyping system consisting of an augmented reality tablet device and an Ultimaker 3D printer as a machine tool.

“Based on an Asus Zenfone smart phone powered by Google Tango AR toolkit, our software system leverages the depth images of the physical object and allows users to interact virtually with the build platform,” they report. “Users can sketch 2D curves on the existing object and convert the 2D curves into 3D shapes via simple interactions. After the designs are created, our software system will convert them into a set of instructions that controls and directs the machine tool. The instructions are sent directly to the 3D printer with the wireless network, so that the newly added 3D shapes will be printed onto the existing objects.”

The Zenfone device communicated with the Ultimaker 3 3D printer over a Wi-Fi network. The physical coordinate system and the AR environment’s coordinate system were aligned through a calibration based on a QR code. The researchers also implemented an AR-based design system that allowed users to design shapes onto existing objects. The Zenfone device acquires 3D point cloud data, which then allows users to sketch and edit 2D curves and project those curves onto existing planes.
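The article does not give the math behind these two steps, so the following is only a minimal sketch of how they could work in principle. It assumes the plane is given by a point and a normal vector, and that calibration yields a rigid transform (a 3x3 rotation plus a translation) from the AR frame to the printer frame. The function names `project_onto_plane` and `ar_to_printer` and all the numbers are hypothetical and are not taken from the researchers' software.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def project_onto_plane(p: Vec3, plane_point: Vec3, normal: Vec3) -> Vec3:
    """Orthogonally project p onto the plane through plane_point with the given normal."""
    n_len_sq = dot(normal, normal)
    if n_len_sq == 0:
        raise ValueError("plane normal must be non-zero")
    diff = tuple(pi - qi for pi, qi in zip(p, plane_point))
    # Signed distance along the normal, scaled so the normal need not be unit length.
    t = dot(diff, normal) / n_len_sq
    return tuple(pi - t * ni for pi, ni in zip(p, normal))


def ar_to_printer(p: Vec3, rotation: Sequence[Vec3], translation: Vec3) -> Vec3:
    """Map a point from the AR frame to the printer frame: rotate, then translate."""
    return tuple(dot(row, p) + t for row, t in zip(rotation, translation))


# Hypothetical example: the top face of a block lies at z = 20 mm in the AR frame.
sketched = (10.0, 5.0, 23.0)  # a sketched point hovering above the face
on_face = project_onto_plane(sketched, (0.0, 0.0, 20.0), (0.0, 0.0, 1.0))

# Hypothetical calibration result: 90 degree rotation about z plus an offset.
rotation = ((0.0, -1.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0))
translation = (100.0, 50.0, 0.0)
in_printer_frame = ar_to_printer(on_face, rotation, translation)
```

In a real system the rotation and translation would be estimated from the detected marker rather than hard-coded as they are here.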

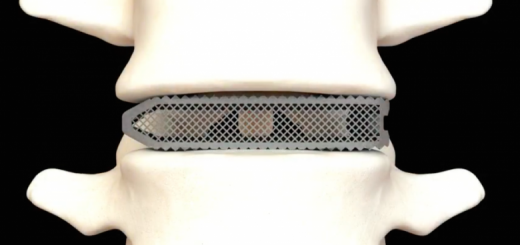

The researchers tested the system by 3D printing two new shapes onto existing objects. Essentially, users were able to design new shapes in augmented reality, projecting them onto an existing object such as a block. The system took that 2D design and turned it into a 3D model, which was then communicated to the 3D printer and printed onto the block. The research combines augmented reality and 3D printing in a way that enables designers to create customized products around existing reference points, which is difficult to do with conventional CAD systems.
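To make the step from a 2D outline to printer instructions concrete, here is a rough sketch, not the researchers' actual conversion. It assumes a closed outline is extruded straight up from the top of the existing object and traced as one perimeter per layer, and it emits generic Marlin-style G-code (`G0`/`G1` moves with absolute extrusion), which may differ from what the Ultimaker 3 expects. The function name `extrude_outline_to_gcode` and every default value (layer height, line width, 2.85 mm filament, feed rate) are illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple

Point2 = Tuple[float, float]


def extrude_outline_to_gcode(
    outline: Sequence[Point2],
    base_z: float,
    height: float,
    layer_height: float = 0.2,       # assumed value, in mm
    line_width: float = 0.4,         # assumed value, in mm
    filament_diameter: float = 2.85, # assumed value, in mm
    feed_rate: int = 1200,           # assumed value, in mm/min
) -> List[str]:
    """Trace a closed 2D outline once per layer, starting on top of an object at base_z."""
    if len(outline) < 3:
        raise ValueError("outline needs at least three points")
    if height <= 0 or layer_height <= 0:
        raise ValueError("height and layer_height must be positive")

    filament_area = math.pi * (filament_diameter / 2) ** 2
    layers = max(1, round(height / layer_height))
    closed = list(outline) + [outline[0]]  # return to the start point

    lines = ["G21 ; millimetres", "G90 ; absolute positioning", "M82 ; absolute extrusion"]
    extruded = 0.0  # cumulative filament length in mm
    for layer in range(1, layers + 1):
        z = base_z + layer * layer_height
        x0, y0 = closed[0]
        lines.append(f"G0 X{x0:.3f} Y{y0:.3f} Z{z:.3f}")  # travel move, no extrusion
        for (xa, ya), (xb, yb) in zip(closed, closed[1:]):
            length = math.hypot(xb - xa, yb - ya)
            # Deposited volume divided by filament cross-section gives filament length.
            extruded += length * layer_height * line_width / filament_area
            lines.append(f"G1 X{xb:.3f} Y{yb:.3f} E{extruded:.5f} F{feed_rate}")
    return lines


# Hypothetical example: a 10 mm square, 1 mm tall, printed on a block whose top is at z = 20 mm.
square = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
gcode = extrude_outline_to_gcode(square, base_z=20.0, height=1.0)
```

A real slicer would also add infill, retraction, temperature control and adhesion handling; this sketch only shows how a sketched outline and a known surface height are enough to produce a list of motion commands.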

Source: 3dprint
